Background

Every organisation will always have cyber security issues that it needs to prioritise and address. Everyone has them, from the most secure places (probably Amazon, Google, Apple, Microsoft, and hopefully our government institutions) to the places that you and I work in or run.

No organisation, not even the best, ever gets rid of all its security issues, but the best ones do make an effort to create a complete list of them and then fix the worst ones. And therein lies the challenge: what are the “worst ones”? This sounds simple, but it is deceptively hard.

In this blog post I will go through many of the things to consider when identifying and prioritising security issues, and at the end make some tool recommendations based on our experience at S4 Applications.

Don’t forget to check out many of the other blogs that we have written about security applications and protecting your business.

Why is Prioritisation Important for Cyber Security Issues?

Well, the simple answer is that you cannot fix all of the Cyber Security Issues within your organisation. The main reasons we see for issues going unfixed are:

- Limited resource / not enough time to do the work

- Upgrading one piece of software (say Java) would then take another piece of software out of its supported environment

- No fix is available

With prioritisation you get the “biggest bang for the buck”, i.e. the greatest increase in security for the least effort.
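To make “biggest bang for the buck” concrete, here is a minimal Python sketch that ranks a backlog by estimated security gain per day of effort. It assumes you can put a relative gain and an effort estimate on each issue; the `Issue` class, its field names and the example issues are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    risk_reduction: float  # relative security gain if fixed (hypothetical scale)
    effort_days: float     # estimated effort to fix; must be greater than zero


def bang_for_buck(issues):
    """Return issues ordered by security gain per day of effort, best first."""
    return sorted(issues, key=lambda i: i.risk_reduction / i.effort_days, reverse=True)


# Hypothetical backlog for illustration only.
backlog = [
    Issue("Upgrade Java on app server", risk_reduction=8, effort_days=10),
    Issue("Disable SMBv1 on file server", risk_reduction=6, effort_days=1),
    Issue("Patch internal wiki", risk_reduction=2, effort_days=2),
]

for issue in bang_for_buck(backlog):
    print(f"{issue.name}: {issue.risk_reduction / issue.effort_days:.1f} per day")
```

In this made-up backlog the Java upgrade offers the largest single gain but drops to the bottom, because it also costs by far the most effort.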

Let’s use risk …

A good way to judge how critical an issue is, is to see where it sits in a list of priorities based on risk.

Now some will say that:

Risk = Probability of something happening x $ cost of the impact

That is a good definition of risk, but it doesn’t really help with prioritisation. Putting a number on either of those two items is really, really hard, if not impossible. Instead, we suggest using relative risk, which is much easier to measure.

Consider a bank: it may not be able to give you either of those figures for its customer database, but it can put the following systems in order of importance:

- On-line banking

- Products a customer has / customer database

- Staff holiday information

- Branch opening times

The above list is reasonably easy to put together just by looking at it. We find that some objective questions like the ones below can also help:

- Is the system subject to PCI-DSS?

- Does the system hold Personally Identifiable Information (PII)?

- Does the system hold health data?

All these questions add clarity and remove subjectivity from prioritisation. The more boxes an application ticks, the more important it becomes. There is also an order of precedence among the questions: health data is more important than PII, and so on.
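One way to honour both rules (more ticks means more important, and some questions outrank others) is to give each question a weight larger than the sum of all lower-precedence weights. The minimal Python sketch below does this with powers of two. The question names, the placement of PCI-DSS between health data and PII, and the example systems are assumptions for illustration only.

```python
# Hypothetical weights. Each question outweighs all lower-precedence questions
# combined (4 > 2 + 1), so precedence is respected, and ticking any extra box
# still always raises the score.
QUESTION_WEIGHTS = {
    "holds_health_data": 4,
    "subject_to_pci_dss": 2,
    "holds_pii": 1,
}


def importance(answers):
    """Sum the weights of every question answered 'yes'."""
    return sum(w for question, w in QUESTION_WEIGHTS.items() if answers.get(question, False))


# Hypothetical systems and answers.
systems = {
    "Patient portal": {"holds_health_data": True, "holds_pii": True},
    "Card payments": {"subject_to_pci_dss": True, "holds_pii": True},
    "CRM": {"holds_pii": True},
    "Branch opening times": {},
}

ranked = sorted(systems, key=lambda name: importance(systems[name]), reverse=True)
print(ranked)
```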

It is all about the context …

If I say, “at home I have a car tyre that is so worn down that it is almost like a mirror”, most people would say that it represents a big risk. The truth of the matter is that the tyre is on a child’s swing, so it actually represents no risk whatsoever. A simple anecdote, but it illustrates that context drives the risk, and therefore how you should prioritise.

The same is true of cyber security issues. Is the issue on an Internet-facing server, or on a developer’s test network? It is the context that drives the risk and the prioritisation.

It is worth considering which properties we should take into account when prioritising a security issue. Here are some suggestions of things to look at:

Context includes

- Properties of the Cyber Security Issues – this is the easiest, and often the tools that find the issues provide the score for you. A network scanner like Nessus will give you a CVSS score from 0 to 10. For application issues it is slightly more complex, but not much.

- What the world is doing with the Cyber Security Issue – this is most appropriate for CVE vulnerabilities because there is a whole threat intel industry available to provide this information.

- Properties of the machine – is this an Internet-facing machine? Is it in a secure data centre? Sitting on a user’s desk?

- Properties of the application / service it provides – as discussed above, not all applications are equal, and their importance is best assessed using objective metrics that work within your business.
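As a rough sketch of how the four kinds of context might be combined, the Python below scales the scanner’s CVSS score by a threat-intel multiplier, an exposure multiplier for the machine and the application’s importance. The multiplicative form and every number in it are assumptions for illustration, not a standard formula; tune them to your own business.

```python
from dataclasses import dataclass

# Hypothetical multipliers for illustration; tune them to your own business.
EXPOSURE = {"internet_facing": 1.5, "data_centre": 1.0, "user_desktop": 0.8, "test_network": 0.3}
THREAT = {"none_known": 0.7, "proof_of_concept": 1.0, "actively_exploited": 1.5}


@dataclass
class Finding:
    cvss: float          # 0.0-10.0 base score from the scanner (issue property)
    threat: str          # key into THREAT (what the world is doing with it)
    exposure: str        # key into EXPOSURE (property of the machine)
    app_importance: int  # application importance, e.g. a checklist score


def contextual_score(f: Finding) -> float:
    """Scale the raw CVSS score by threat, exposure and application importance."""
    # The +1 stops an application scoring 0 from wiping out the issue entirely.
    return f.cvss * THREAT[f.threat] * EXPOSURE[f.exposure] * (1 + f.app_importance)


critical_on_test_box = Finding(9.8, "proof_of_concept", "test_network", 3)
medium_on_web_server = Finding(5.0, "actively_exploited", "internet_facing", 3)

print(contextual_score(critical_on_test_box))
print(contextual_score(medium_on_web_server))
```

Like the worn tyre on the swing, a critical CVSS score on an isolated test box ends up below an actively exploited medium issue on an Internet-facing server.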

Changes over time

To take a hard problem and make it harder, you have to remember that things change over time. For me, this was most evident with BlueKeep, a cyber security issue in Microsoft’s Remote Desktop Protocol that came to light in 2019 and for which Microsoft released a patch.

Microsoft also said that, in its opinion, the flaw had the potential to allow unauthenticated remote access. The good news was that actually achieving that unauthenticated remote access was very hard to do. As soon as Microsoft released the patch, security researchers, both white hat and black hat, started trying to exploit it.

Reasonably quickly, they came up with a way of making the server hang, but getting unauthenticated remote access to work consistently took a couple of months.

During that period the threat intel people were watching both the white hat and black hat communities very closely. It was initially a “potential problem”; then, as the tools got better, its priority went up and it became a “real problem”. Then there was a massive explosion of people trying to exploit it. Most systems are now patched, so the black hat guys have moved on to the next thing.

The risk of this item affecting your organisation followed a curve: from low, to medium, to high, then back to medium. Your calculations need to account for this too.
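One simple way to account for that curve is to keep a dated threat assessment per vulnerability and re-score issues regularly, rather than scoring them once. The minimal Python sketch below looks up the multiplier in force on a given day with `bisect`. The timeline, its day offsets and its multipliers are hypothetical and only loosely shaped like the curve described above; they are not real BlueKeep threat-intel data.

```python
from bisect import bisect_right

# Hypothetical timeline for one vulnerability: (days since disclosure, threat
# multiplier from that day on). It loosely mirrors the low -> medium -> high ->
# medium curve described above; the figures are illustrative, not real intel.
THREAT_TIMELINE = [
    (0, 0.5),    # low: patch released, exploitation only theoretical
    (14, 1.0),   # medium: crash/hang proof of concept circulating
    (90, 2.0),   # high: reliable remote exploit, mass exploitation
    (180, 1.0),  # medium: most systems patched, attackers move on
]
_DAYS = [day for day, _ in THREAT_TIMELINE]


def threat_multiplier(days_since_disclosure: int) -> float:
    """Return the multiplier in force on the given day."""
    idx = bisect_right(_DAYS, days_since_disclosure) - 1
    if idx < 0:
        raise ValueError("day precedes disclosure")
    return THREAT_TIMELINE[idx][1]


def priority_on(day: int, base_score: float) -> float:
    """Re-score the same issue as the threat picture changes."""
    return base_score * threat_multiplier(day)


for day in (0, 30, 120, 365):
    print(day, priority_on(day, base_score=9.8))
```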

Reasonable Distribution

There is no point in having everything scored 99 out of 100, so you need to apply some maths to get a reasonable distribution. It is not a hard maths problem, but it does need doing.

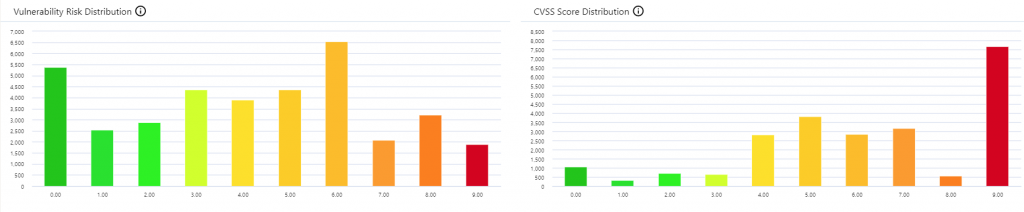

The graphs above show the same vulnerability data, once prioritised and distributed using all the business metrics, and once using just the CVSS score.

As you can see, the first is much easier for all teams to deal with. The second basically means that everything is urgent, which is something an organisation just cannot deal with easily.
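The maths behind a reasonable distribution can take many forms. One simple option, sketched below in Python, is to rank issues by their score and assign priority bands by quota (a percentile cut) rather than by absolute value, so that only a fixed share of issues can ever be top priority. The band names, the 5/15/30/50 split and the example data are assumptions for illustration.

```python
from collections import Counter

# Hypothetical quota: percentage of issues that may land in each band (sums to 100).
DEFAULT_BANDS = (("P1", 5), ("P2", 15), ("P3", 30), ("P4", 50))


def distribute(scores, bands=DEFAULT_BANDS):
    """Assign priority bands by rank, so only the top 5% of issues become P1."""
    if sum(pct for _, pct in bands) != 100:
        raise ValueError("band percentages must sum to 100")
    ranked = sorted(scores, key=scores.get, reverse=True)
    n = len(ranked)
    labels = {}
    for position, issue in enumerate(ranked):
        cumulative_pct = 0
        for label, pct in bands:
            cumulative_pct += pct
            # Integer form of position / n < cumulative_pct / 100 (no float rounding).
            if position * 100 < cumulative_pct * n:
                labels[issue] = label
                break
    return labels


# Hypothetical data: 20 issues that all look "urgent" on raw score alone.
scores = {f"VULN-{i:02d}": 9.0 + i * 0.05 for i in range(20)}
assigned = distribute(scores)
print(Counter(assigned.values()))
```

Even though every raw score here sits above 9, only one issue ends up as P1, which gives teams a queue they can actually work through.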

Bigger than it initially looks …

This article is part of a four-article series, each looking at a different part of the same problem. Here we have focused on one area and neatly skipped over some other, rather complex, issues.

We see four key pillars of a complete vulnerability management solution, covering the following high-level functionality:

- Prioritisation of issues – discussed in this blog.

- Integration of data – Saying “connect to my DAST tool and extract the data” is much easier to write than to implement, as is aligning data from DAST, SAST and network scans into a single, browsable data set that lets you navigate from application, to server, to vulnerability and so on.

- Visualisation and dashboard reporting – However much is automated, people don’t always do what you expect in the way you expect. For this reason, you need reports, dashboards and other ways of understanding what got you to where you are now, what is happening at this point in time, and where you need to go in the future to achieve your goals and objectives.

- Automation – IT security people are expensive, so you want to use this valuable resource as sparingly as possible. Automation removes people from most of the process, letting the machine make the routine decisions and freeing people to focus on what really matters.

You will find that we discuss all four pillars in our article on Vulnerability Management Program.
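To give a feel for the data integration pillar, the Python sketch below normalises findings from different tools into one record type and indexes them as application, then server, then vulnerability. It is a minimal sketch under stated assumptions: real DAST, SAST and network scanner exports are not modelled (the two constructor functions stand in for parsing them), and the asset inventory, hostnames and application names are hypothetical. The identifiers are real public ones used only as example values (CVE-2019-0708 is BlueKeep, CWE-79 is cross-site scripting, CWE-89 is SQL injection).

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    source: str       # "DAST", "SAST" or "network"
    application: str
    server: str       # "n/a" for SAST, which scans code rather than hosts
    vuln_id: str      # CVE, CWE or tool-specific identifier


# Hypothetical asset inventory (e.g. exported from a CMDB).
SERVER_TO_APP = {"web01": "Online banking", "wiki01": "Intranet wiki"}


def from_network_scan(host, cve):
    # Network scanners know the host but not the application, so look it up.
    return Finding("network", SERVER_TO_APP.get(host, "unknown"), host, cve)


def from_app_scan(source, app, vuln_id, host="n/a"):
    # DAST and SAST tools are pointed at an application rather than a host.
    return Finding(source, app, host, vuln_id)


def build_index(findings):
    """Group findings as application -> server -> sorted (vuln_id, source) pairs."""
    index = defaultdict(lambda: defaultdict(list))
    for f in findings:
        index[f.application][f.server].append((f.vuln_id, f.source))
    return {app: {srv: sorted(v) for srv, v in servers.items()} for app, servers in index.items()}


findings = [
    from_network_scan("web01", "CVE-2019-0708"),
    from_network_scan("wiki01", "CVE-2019-0708"),
    from_app_scan("DAST", "Online banking", "CWE-79", host="web01"),
    from_app_scan("SAST", "Online banking", "CWE-89"),
]
index = build_index(findings)
print(index["Online banking"]["web01"])
```

The asset inventory is what makes the join possible: without a server-to-application mapping, network findings cannot be placed alongside the DAST and SAST results for the same application.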

Security vendors don’t help

The security product vendors all have a reasonable idea of the above and are all building a suite of tools to address parts of the problem. The issue is that most vendors have one good tool and a bunch of mediocre tools that tag along.

If I look at Qualys (I am not picking on them; insert Tenable, Rapid7 etc., they are all the same), their network scanning tool is genuinely market-leading. Their other 20+ tools range from “ok” to “hmm, not bad”. They exist together to meet a sales objective of “we need more products to sell to our existing customers”.

Be wary of vendor lock-in business models.

Several years ago, software vendors would sell a perpetual licence for their software; this was expensive in year 1, but less so in year 2+. This created a degree of vendor lock-in, because if you wanted to move, you had to pay that big up-front fee again.

The vendors then worked out that they could make more money by selling annual subscriptions (which is where the whole market now is). This has the advantage that, as a user, you can switch if a vendor falls behind the market or gets too aggressive with its pricing.

This burned a number of vendors, so they now look for other ways to generate lock-in. One of those is selling a set of loosely connected, mediocre tools. That makes it much harder to get rid of them all. Also, should you decide to remove one tool, they may put the pricing up on the others as a disincentive.

We generally recommend buying best-of-breed technologies and reviewing the situation every 2 to 3 years to ensure that you have the best technology at the best price.

Next steps

A planned and pragmatic vulnerability management programme will constantly review your organisation’s current security position and provide a roadmap for measuring and improving it.

What is security maturity and how can S4 Applications help you enhance your security posture?

Read our blog: Assess your security Posture with our Security Maturity Model.